Azure Data Factory: 7 Powerful Features You Must Know

Ever wondered how companies process terabytes of data daily without breaking a sweat? Meet Azure Data Factory—the powerhouse behind seamless cloud data integration. It’s not magic; it’s Microsoft’s intelligent ETL tool that orchestrates data workflows across clouds and on-premises systems with ease.

What Is Azure Data Factory and Why It Matters

Azure Data Factory (ADF) is Microsoft’s cloud-based data integration service that allows organizations to create data-driven workflows for orchestrating and automating data movement and transformation. Think of it as the conductor of an orchestra—coordinating various data sources, processing engines, and destinations to deliver timely, accurate insights.

Core Definition and Purpose

Azure Data Factory enables the creation of data pipelines that ingest, transform, and move data from diverse sources to destinations like Azure Synapse Analytics or Azure Data Lake Storage, where reporting tools such as Power BI can consume it. Unlike traditional ETL tools, ADF is serverless: you don’t manage infrastructure, you just define your workflows.

- Supports batch and near real-time (event-triggered) data integration

- Enables hybrid data scenarios (cloud + on-premises)

- Integrates with Azure Databricks, HDInsight, SQL Server, and more

Evolution from SSIS to Cloud-Native

For years, SQL Server Integration Services (SSIS) was the go-to for ETL in Microsoft environments. But as data moved to the cloud, SSIS faced scalability and flexibility challenges. Azure Data Factory emerged as its cloud-native successor, offering elasticity, global reach, and seamless integration with modern data platforms.

Microsoft even introduced Azure-SSIS Integration Runtime to help migrate existing SSIS workloads to the cloud—ensuring legacy investments aren’t wasted.

“Azure Data Factory is not just a tool; it’s a paradigm shift in how we think about data integration in the cloud era.” — Microsoft Azure Documentation

Key Components of Azure Data Factory

To master Azure Data Factory, you need to understand its building blocks. Each component plays a critical role in designing scalable, maintainable data pipelines.

Data Pipelines and Activities

A pipeline in Azure Data Factory is a logical grouping of activities that together perform a task. For example, a pipeline might copy data from an on-premises SQL Server to Azure Blob Storage, then trigger a data transformation using Azure Databricks.

- Copy Activity: Moves data between supported data stores

- Transformation Activities: Includes Data Flow, HDInsight, Azure Functions, etc.

- Control Activities: Manage workflow logic (e.g., If Condition, ForEach, Execute Pipeline)
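To make the copy-then-transform example concrete, here is a minimal sketch of what such a pipeline definition could look like, written as a Python dictionary that mirrors ADF's JSON format. The pipeline, dataset, linked service, and notebook names are all hypothetical placeholders, and the small helper only checks that every `dependsOn` entry points at an activity that exists.

```python
import json

# All names below (pipeline, datasets, linked service, notebook path) are
# hypothetical placeholders, not resources from a real factory.
pipeline = {
    "name": "CopyThenTransform",
    "properties": {
        "activities": [
            {
                "name": "CopySqlToBlob",
                "type": "Copy",
                "inputs": [{"referenceName": "OnPremSqlTable", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "BlobStaging", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "SqlServerSource"},
                    "sink": {"type": "DelimitedTextSink"},
                },
            },
            {
                "name": "TransformInDatabricks",
                "type": "DatabricksNotebook",
                # Run only after the copy step has succeeded.
                "dependsOn": [
                    {"activity": "CopySqlToBlob", "dependencyConditions": ["Succeeded"]}
                ],
                "linkedServiceName": {
                    "referenceName": "DatabricksWorkspace",
                    "type": "LinkedServiceReference",
                },
                "typeProperties": {"notebookPath": "/Shared/transform"},
            },
        ]
    },
}


def missing_dependencies(pipeline_def):
    """Return (activity, dependency) pairs whose dependency is not defined."""
    activities = pipeline_def["properties"]["activities"]
    names = {activity["name"] for activity in activities}
    return [
        (activity["name"], dep["activity"])
        for activity in activities
        for dep in activity.get("dependsOn", [])
        if dep["activity"] not in names
    ]


if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
    print(missing_dependencies(pipeline))
```

A check like this is cheap to run before publishing, since a misspelled activity name in `dependsOn` is an easy mistake to make when editing definitions by hand.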

Linked Services and Datasets

Linked Services define the connection information to external resources—like a database connection string. Datasets, on the other hand, represent the structure of the data within those stores.

For instance, a linked service might connect to an Azure SQL Database, while a dataset defines which table or view to use. Together, they enable activities to know where to get and put data.
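The relationship can be sketched as two small definitions, again as Python dictionaries that mirror ADF's JSON. The names `AzureSqlDb` and `CustomerTable`, the table itself, and the connection string placeholder are assumptions made for illustration.

```python
# Hypothetical linked service: holds the connection details only.
linked_service = {
    "name": "AzureSqlDb",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            # Placeholder; in practice this would usually come from Azure Key Vault.
            "connectionString": "<connection string>"
        },
    },
}

# Hypothetical dataset: names the table and points back at the linked service.
dataset = {
    "name": "CustomerTable",
    "properties": {
        "type": "AzureSqlTable",
        "linkedServiceName": {
            "referenceName": "AzureSqlDb",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {"schema": "dbo", "table": "Customers"},
    },
}


def uses_linked_service(dataset_def, linked_service_def):
    """True when the dataset references the given linked service by name."""
    reference = dataset_def["properties"]["linkedServiceName"]["referenceName"]
    return reference == linked_service_def["name"]


if __name__ == "__main__":
    print(uses_linked_service(dataset, linked_service))
```

Keeping the connection in one place means several datasets (one per table or view) can share a single linked service.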

Integration Runtime (IR)

The Integration Runtime is the compute infrastructure that ADF uses to run activities. There are three types:

- Azure IR: Runs in the cloud, ideal for cloud-to-cloud data movement

- Self-Hosted IR: Installed on-premises for secure access to local data sources

- Azure-SSIS IR: Specifically for running SSIS packages in Azure

Choosing the right IR is crucial for performance and security, especially in hybrid environments.

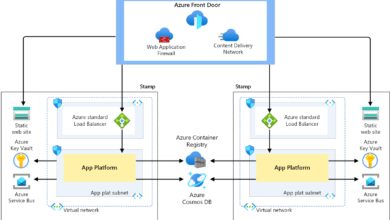

How Azure Data Factory Enables Hybrid Data Integration

One of the standout strengths of Azure Data Factory is its ability to bridge on-premises and cloud systems. In today’s hybrid IT landscape, data lives everywhere, from legacy ERP systems to SaaS applications like Salesforce.

Secure Connectivity with Self-Hosted IR

The Self-Hosted Integration Runtime acts as a secure bridge between Azure and your internal network. It runs as a Windows service on a local machine, enabling ADF to access databases like Oracle, MySQL, or file shares behind firewalls.

It uses outbound HTTPS communication only, minimizing security risks. You can even scale it across multiple nodes for high availability and load balancing.
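In a definition, routing a connection through a Self-Hosted IR comes down to a `connectVia` reference on the linked service. The sketch below assumes a hypothetical runtime named `SelfHostedIR` and a hypothetical SQL Server linked service. The helper falls back to `AutoResolveIntegrationRuntime`, the default Azure IR, when no reference is present.

```python
# Hypothetical on-premises linked service routed through a Self-Hosted IR.
onprem_sql = {
    "name": "OnPremSqlServer",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {"connectionString": "<connection string>"},
        "connectVia": {
            "referenceName": "SelfHostedIR",  # assumed runtime name
            "type": "IntegrationRuntimeReference",
        },
    },
}

# Hypothetical cloud linked service with no explicit runtime.
cloud_blob = {
    "name": "LandingBlob",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection string>"},
    },
}


def runtime_for(linked_service_def):
    """Return the integration runtime a linked service will use."""
    connect_via = linked_service_def["properties"].get("connectVia")
    if connect_via is None:
        return "AutoResolveIntegrationRuntime"
    return connect_via["referenceName"]


if __name__ == "__main__":
    for definition in (onprem_sql, cloud_blob):
        print(definition["name"], "->", runtime_for(definition))
```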

Support for On-Premises and Cloud Sources

Azure Data Factory supports more than 90 built-in connectors, including:

- On-premises: SQL Server, Oracle, IBM DB2, SAP

- Cloud: Amazon S3, Google BigQuery, Salesforce, Snowflake

- File-based: CSV, JSON, Parquet, XML

This breadth of connectivity makes ADF a universal translator for enterprise data.

Real-World Use Case: Migrating Legacy Data

Consider a financial institution moving from an on-premises data warehouse to Azure Synapse. Using ADF, they can schedule nightly syncs via Self-Hosted IR, transform data using Data Flows, and load it into Synapse—all without exposing internal systems to the public internet.

This kind of migration is not only secure but also incremental, reducing downtime and risk.

Data Transformation Capabilities in Azure Data Factory

While data movement is essential, transformation is where real value is created. Azure Data Factory offers multiple ways to transform data, from code-free visual tools to full programming flexibility.

Mapping Data Flows: No-Code Transformation

Mapping Data Flows is ADF’s visual, drag-and-drop transformation engine. Built on Apache Spark, it allows users to define transformations without writing code.

- Supports data cleansing, aggregation, joins, and pivoting

- Auto-generates Spark code in the background

- Runs on a serverless Spark environment—no cluster management needed

It’s ideal for data engineers and analysts who want to build ETL logic quickly and visually.

Integration with Azure Databricks and HDInsight

For advanced analytics and machine learning, ADF integrates seamlessly with Azure Databricks and HDInsight. You can trigger notebooks written in Python, Scala, or SQL as part of a pipeline.

For example, after ingesting customer data, a pipeline can invoke a Databricks notebook to run a churn prediction model, then store the results in a database.

Learn more about integrating Databricks with ADF: Databricks Notebook Activity.

Custom Logic with Azure Functions and Logic Apps

Sometimes, you need custom business logic. Azure Data Factory supports Azure Functions (serverless code) and Logic Apps (workflow automation) as activities.

Imagine validating a batch of invoices using a Python function—ADF can call that function, pass data, and react based on the result. This extensibility makes ADF adaptable to almost any use case.
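As a rough illustration of the invoice example, the validation logic itself might look like the function below. This is only the core check, not a deployable Azure Function: the required fields and the rule that amounts must be positive are assumptions invented for the sketch. In practice it would sit inside an HTTP-triggered function that returns this result as JSON, so the pipeline can branch on it.

```python
import json

# Hypothetical invoice schema, chosen only for this example.
REQUIRED_FIELDS = ("invoice_id", "amount", "currency")


def validate_invoices(batch):
    """Check each invoice and return a JSON-serializable summary."""
    errors = []
    for index, invoice in enumerate(batch):
        missing = [field for field in REQUIRED_FIELDS if field not in invoice]
        if missing:
            errors.append({"index": index, "reason": "missing fields: " + ", ".join(missing)})
        elif not isinstance(invoice["amount"], (int, float)) or invoice["amount"] <= 0:
            errors.append({"index": index, "reason": "amount must be a positive number"})
    return {"valid": not errors, "errors": errors}


if __name__ == "__main__":
    sample_batch = [
        {"invoice_id": "INV-1", "amount": 120.0, "currency": "USD"},
        {"invoice_id": "INV-2", "amount": -5, "currency": "USD"},
        {"invoice_id": "INV-3", "currency": "EUR"},
    ]
    print(json.dumps(validate_invoices(sample_batch), indent=2))
```

A downstream If Condition activity could then read the `valid` flag from the function's output to decide whether to load the batch or route it for review.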

Orchestration and Workflow Automation

At its core, Azure Data Factory is a workflow orchestrator. It doesn’t just move data; it manages the entire lifecycle of data processing jobs.

Scheduling and Triggering Pipelines

Besides manual (on-demand) runs, pipelines can be started by three types of trigger:

- Schedule Trigger: Runs at specific times (e.g., daily at 2 AM)

- Tumbling Window Trigger: Ideal for time-series data processing

- Event-Based Trigger: Responds to file uploads in Blob Storage or events in Event Grid

This flexibility allows near real-time, batch, or hybrid processing models.
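Two of these trigger types are easy to picture in code. The sketch below shows a schedule trigger definition for the "daily at 2 AM" example, as a Python dictionary mirroring ADF's JSON, followed by a plain-Python helper that lists the fixed, contiguous, non-overlapping intervals that a tumbling window represents. The trigger name, pipeline name, and start date are hypothetical, and the helper illustrates the concept rather than ADF's own scheduler.

```python
from datetime import datetime, timedelta

# Hypothetical schedule trigger: runs the "NightlyLoad" pipeline daily at 02:00 UTC.
daily_trigger = {
    "name": "DailyAt2AM",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T00:00:00Z",
                "timeZone": "UTC",
                "schedule": {"hours": [2], "minutes": [0]},
            }
        },
        "pipelines": [
            {"pipelineReference": {"referenceName": "NightlyLoad", "type": "PipelineReference"}}
        ],
    },
}


def tumbling_windows(start, end, size):
    """List back-to-back (window_start, window_end) pairs that fit before `end`."""
    windows = []
    window_start = start
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


if __name__ == "__main__":
    for window in tumbling_windows(
        datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 3), timedelta(hours=1)
    ):
        print(window[0].isoformat(), "->", window[1].isoformat())
```

Because each window has an explicit start and end, a pipeline can process exactly one slice of time-series data per run, which is what makes backfills and reruns predictable.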

Dependency Management and Control Flow

ADF supports complex workflows using control activities. You can:

- Use If Condition to branch based on metadata

- Loop through items with ForEach

- Call other pipelines with Execute Pipeline

- Pause for a fixed interval with the Wait activity (for example, to give an external system time to finish)

This makes it possible to build dynamic, intelligent data pipelines that adapt to changing conditions.
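Here is a sketch of how these control activities could be expressed, as Python dictionaries that mirror ADF's JSON. The activity names, the `tableList` parameter, the child pipelines, and the upstream `GetFileInfo` activity (assumed to be a Get Metadata step returning a file size) are all hypothetical. The helper simply walks a control activity and lists what is nested inside it.

```python
# Hypothetical ForEach: runs a child pipeline once per table name, 4 at a time.
for_each_table = {
    "name": "ForEachTable",
    "type": "ForEach",
    "typeProperties": {
        "items": {"value": "@pipeline().parameters.tableList", "type": "Expression"},
        "isSequential": False,
        "batchCount": 4,
        "activities": [
            {
                "name": "LoadOneTable",
                "type": "ExecutePipeline",
                "typeProperties": {
                    "pipeline": {"referenceName": "LoadTable", "type": "PipelineReference"},
                    "parameters": {"tableName": {"value": "@item()", "type": "Expression"}},
                    "waitOnCompletion": True,
                },
            }
        ],
    },
}

# Hypothetical If Condition: branch on the size reported by an upstream activity.
process_if_not_empty = {
    "name": "ProcessIfNotEmpty",
    "type": "IfCondition",
    "dependsOn": [{"activity": "GetFileInfo", "dependencyConditions": ["Succeeded"]}],
    "typeProperties": {
        "expression": {
            "value": "@greater(activity('GetFileInfo').output.size, 0)",
            "type": "Expression",
        },
        "ifTrueActivities": [
            {
                "name": "RunProcessing",
                "type": "ExecutePipeline",
                "typeProperties": {
                    "pipeline": {"referenceName": "ProcessFile", "type": "PipelineReference"}
                },
            }
        ],
        "ifFalseActivities": [
            {"name": "PauseBeforeRetry", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 60}}
        ],
    },
}


def nested_activity_names(activity):
    """Collect the names of activities nested directly inside a control activity."""
    props = activity.get("typeProperties", {})
    nested = []
    for key in ("activities", "ifTrueActivities", "ifFalseActivities"):
        nested.extend(child["name"] for child in props.get(key, []))
    return nested


if __name__ == "__main__":
    print(nested_activity_names(for_each_table))
    print(nested_activity_names(process_if_not_empty))
```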

Monitoring and Debugging Tools

The Azure portal provides a robust monitoring experience. You can:

- View pipeline run history and durations

- Inspect input/output of each activity

- Set up alerts using Azure Monitor

- Use the built-in debugger to test pipelines before deployment

These tools reduce troubleshooting time and improve pipeline reliability.

Security, Compliance, and Governance

In enterprise environments, security isn’t optional. Azure Data Factory provides multiple layers of protection to ensure data integrity and compliance.

Role-Based Access Control (RBAC)

ADF integrates with Microsoft Entra ID (formerly Azure Active Directory) and supports Azure RBAC. You can assign roles like:

- Data Factory Contributor: Can create and edit pipelines

- Reader: Can view but not modify resources

- Key Vault Secrets User: Can read secret values stored in Azure Key Vault (the Key Vault Reader role exposes only metadata, not secret contents)

This ensures least-privilege access across teams.

Data Encryption and Private Endpoints

All data in transit is encrypted using HTTPS/TLS. At rest, data is encrypted via Azure Storage’s encryption mechanisms.

For added security, you can deploy ADF with Private Endpoints, allowing communication over Azure Private Link—keeping traffic within the Microsoft backbone network.

Learn more: Azure Private Link for Data Factory.

Audit Logs and Compliance Standards

Azure Data Factory logs all operations to Azure Monitor and Log Analytics. These logs can be used for auditing, troubleshooting, and compliance reporting.

ADF complies with major standards including:

- GDPR

- ISO 27001

- SOC 1/2

- HIPAA

This makes it suitable for regulated industries like healthcare and finance.

Performance Optimization and Cost Management

While ADF is serverless and scales automatically, performance and cost depend on how you design your pipelines.

Optimizing Copy Activity Performance

The Copy Activity is often the most used—and misused—component. To optimize it:

- Use PolyBase or the COPY statement when loading large volumes into Azure Synapse Analytics

- Enable parallel copy to increase throughput

- Use compression (e.g., GZip) for large files

- Choose the right Integration Runtime location (closest to source/destination)

Microsoft provides a performance tuning guide to help maximize efficiency.
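As a rough sketch, several of these settings live directly on the Copy Activity definition. The example below assumes a Parquet source and an Azure Synapse Analytics sink. The activity name and the specific numbers are illustrative only, not recommendations, and the helper just produces a tuned copy of a definition so different settings can be compared.

```python
import copy

# Hypothetical Copy Activity loading Parquet files into Azure Synapse Analytics.
load_to_synapse = {
    "name": "LoadToSynapse",
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "ParquetSource"},
        # Ask the Synapse sink to load through the COPY statement.
        "sink": {"type": "SqlDWSink", "allowCopyCommand": True},
    },
}


def with_tuning(activity, parallel_copies, data_integration_units):
    """Return a copy of a Copy Activity with explicit throughput settings."""
    tuned = copy.deepcopy(activity)
    tuned["typeProperties"]["parallelCopies"] = parallel_copies
    tuned["typeProperties"]["dataIntegrationUnits"] = data_integration_units
    return tuned


if __name__ == "__main__":
    # Illustrative values only; appropriate settings depend on the workload.
    tuned = with_tuning(load_to_synapse, parallel_copies=8, data_integration_units=16)
    print(tuned["typeProperties"])
```

Leaving `parallelCopies` and `dataIntegrationUnits` unset lets the service choose values itself, so explicit settings are mainly worth testing when a copy is measurably slower than expected.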

Managing Data Flow Execution

Mapping Data Flows run on Spark clusters. To control costs:

- Select the appropriate cluster size (8, 16, 32 cores)

- Use auto-resize to scale based on data volume

- Enable graceful degradation for smaller datasets

- Cache transformations to avoid recomputation

Remember: larger clusters process faster but cost more. Balance speed and budget.

Cost Monitoring with Azure Pricing Calculator

Azure Data Factory pricing is based on:

- Pipeline runs (activity executions)

- Data movement (volume and frequency)

- Data Flow execution (core hours)

- Self-Hosted IR usage (if applicable)

Use the Azure Pricing Calculator to estimate costs before deployment. Monitor spending via Cost Management + Billing in the Azure portal.
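For a quick back-of-the-envelope figure, the main cost drivers can be combined in a few lines. The sketch below assumes orchestration is billed per 1,000 activity runs, data movement per DIU-hour, and Data Flows per vCore-hour. Every rate in the example is a made-up placeholder, so substitute the current figures from the pricing page before relying on the result.

```python
def estimate_monthly_cost(
    activity_runs,
    diu_hours,
    data_flow_vcore_hours,
    rate_per_1000_runs,
    rate_per_diu_hour,
    rate_per_vcore_hour,
):
    """Combine the main ADF cost drivers into a simple monthly estimate."""
    orchestration = activity_runs / 1000 * rate_per_1000_runs
    data_movement = diu_hours * rate_per_diu_hour
    data_flows = data_flow_vcore_hours * rate_per_vcore_hour
    return {
        "orchestration": orchestration,
        "data_movement": data_movement,
        "data_flows": data_flows,
        "total": orchestration + data_movement + data_flows,
    }


if __name__ == "__main__":
    # Placeholder rates and volumes, NOT real Azure prices.
    estimate = estimate_monthly_cost(
        activity_runs=30_000,
        diu_hours=100,
        data_flow_vcore_hours=200,
        rate_per_1000_runs=1.0,
        rate_per_diu_hour=0.25,
        rate_per_vcore_hour=0.5,
    )
    for item, amount in estimate.items():
        print(f"{item}: {amount:.2f}")
```

Breaking the total into its parts makes it easier to see whether orchestration, data movement, or Data Flows dominates the bill.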

Advanced Scenarios and Future Trends

As organizations mature in their data journey, they explore advanced use cases powered by Azure Data Factory.

Real-Time Data Processing with Event Triggers

While ADF is primarily batch-oriented, it supports near real-time processing via event-based triggers. For example, when a new file lands in Azure Blob Storage, ADF can instantly trigger a pipeline to process it.

This is ideal for IoT data, log files, or customer transaction feeds that require timely analysis.
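A storage event trigger for the "new file lands" case might be defined roughly as follows, shown as a Python dictionary mirroring ADF's JSON. The trigger name, container and folder, pipeline name, and the `fileName` parameter are hypothetical, and the storage account scope is left as a placeholder. The `would_fire` helper is a simplified stand-in for the service's path filtering, included only to show how the prefix and suffix filters combine.

```python
# Hypothetical trigger: fire when a .csv blob is created under landing/incoming/.
new_file_trigger = {
    "name": "OnNewLandingFile",
    "properties": {
        "type": "BlobEventsTrigger",
        "typeProperties": {
            "blobPathBeginsWith": "/landing/blobs/incoming/",
            "blobPathEndsWith": ".csv",
            "ignoreEmptyBlobs": True,
            "events": ["Microsoft.Storage.BlobCreated"],
            "scope": "<storage account resource ID>",  # placeholder
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "ProcessLandingFile",
                    "type": "PipelineReference",
                },
                # Pass the name of the new blob to the pipeline.
                "parameters": {"fileName": "@triggerBody().fileName"},
            }
        ],
    },
}


def would_fire(trigger, blob_path):
    """Simplified check of the trigger's prefix and suffix filters."""
    filters = trigger["properties"]["typeProperties"]
    return blob_path.startswith(filters["blobPathBeginsWith"]) and blob_path.endswith(
        filters["blobPathEndsWith"]
    )


if __name__ == "__main__":
    for path in (
        "/landing/blobs/incoming/orders.csv",
        "/landing/blobs/incoming/orders.json",
        "/archive/blobs/incoming/orders.csv",
    ):
        print(path, "->", would_fire(new_file_trigger, path))
```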

CI/CD Integration for DevOps

Modern data teams use DevOps practices. ADF supports CI/CD through:

- ARM templates for infrastructure-as-code

- Git integration (Azure Repos or GitHub)

- Pipeline deployment across dev, test, and production environments

This ensures consistency, version control, and faster delivery of data solutions.
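One common way to promote the same ARM template across environments is to pair it with a separate parameter file per environment. The sketch below generates such files in the standard ARM deployment parameters format. The factory names and the `storageAccountUrl` parameter are hypothetical and would need to match whatever parameters your exported template actually declares.

```python
import json

# Schema identifier for ARM deployment parameter files.
PARAMETERS_SCHEMA = (
    "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"
)


def parameter_file(factory_name, overrides):
    """Build an ARM parameter file for one environment."""
    parameters = {"factoryName": {"value": factory_name}}
    parameters.update({name: {"value": value} for name, value in overrides.items()})
    return {
        "$schema": PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": parameters,
    }


# Hypothetical environments and values.
environments = {
    "dev": parameter_file("adf-demo-dev", {"storageAccountUrl": "https://devstore.example.net"}),
    "test": parameter_file("adf-demo-test", {"storageAccountUrl": "https://teststore.example.net"}),
    "prod": parameter_file("adf-demo-prod", {"storageAccountUrl": "https://prodstore.example.net"}),
}

if __name__ == "__main__":
    for env, content in environments.items():
        print(f"--- parameters.{env}.json ---")
        print(json.dumps(content, indent=2))
```

Keeping environment differences in parameter files, and nowhere else, means the template that reaches production is the same one that was tested.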

AI-Powered Data Integration (Future Outlook)

Microsoft is integrating AI into ADF to enhance productivity. Features like auto-mapping in Data Flows use machine learning to suggest column mappings based on names and data types.

Future versions may include anomaly detection in pipelines, predictive scheduling, and natural language to pipeline generation—making data integration even more intelligent.

What is Azure Data Factory used for?

Azure Data Factory is used to create, schedule, and manage data pipelines that move and transform data across cloud and on-premises sources. It’s commonly used for ETL/ELT processes, data warehousing, migration, and analytics preparation.

Is Azure Data Factory serverless?

Yes, Azure Data Factory is a serverless service. You don’t manage the underlying infrastructure—Microsoft handles scaling, availability, and maintenance. You only pay for what you use.

How does ADF differ from Azure Synapse Pipelines?

Azure Synapse Pipelines is built on the same engine as ADF and offers largely the same capabilities, though a few features differ between the two. It is tightly integrated with Azure Synapse Analytics, making it a natural fit for data warehousing workloads within a Synapse workspace.

Can I use Azure Data Factory for real-time streaming?

ADF is primarily designed for batch processing. For real-time streaming, consider Azure Stream Analytics or Azure Databricks with Structured Streaming. However, ADF can trigger near real-time workflows using event-based triggers.

How much does Azure Data Factory cost?

Pricing depends on usage: pipeline orchestration (activity runs), data movement, and Data Flow execution. Current rates and any free allowances are listed on the Azure Data Factory pricing page.

In summary, Azure Data Factory is a powerful, flexible, and secure platform for modern data integration. Whether you’re migrating legacy systems, building a data lake, or automating analytics pipelines, ADF provides the tools to succeed. Its seamless cloud integration, hybrid capabilities, and evolving AI features make it a cornerstone of any data strategy on Azure. As data continues to grow in volume and complexity, mastering Azure Data Factory is becoming less of an advantage and more of a necessity.
